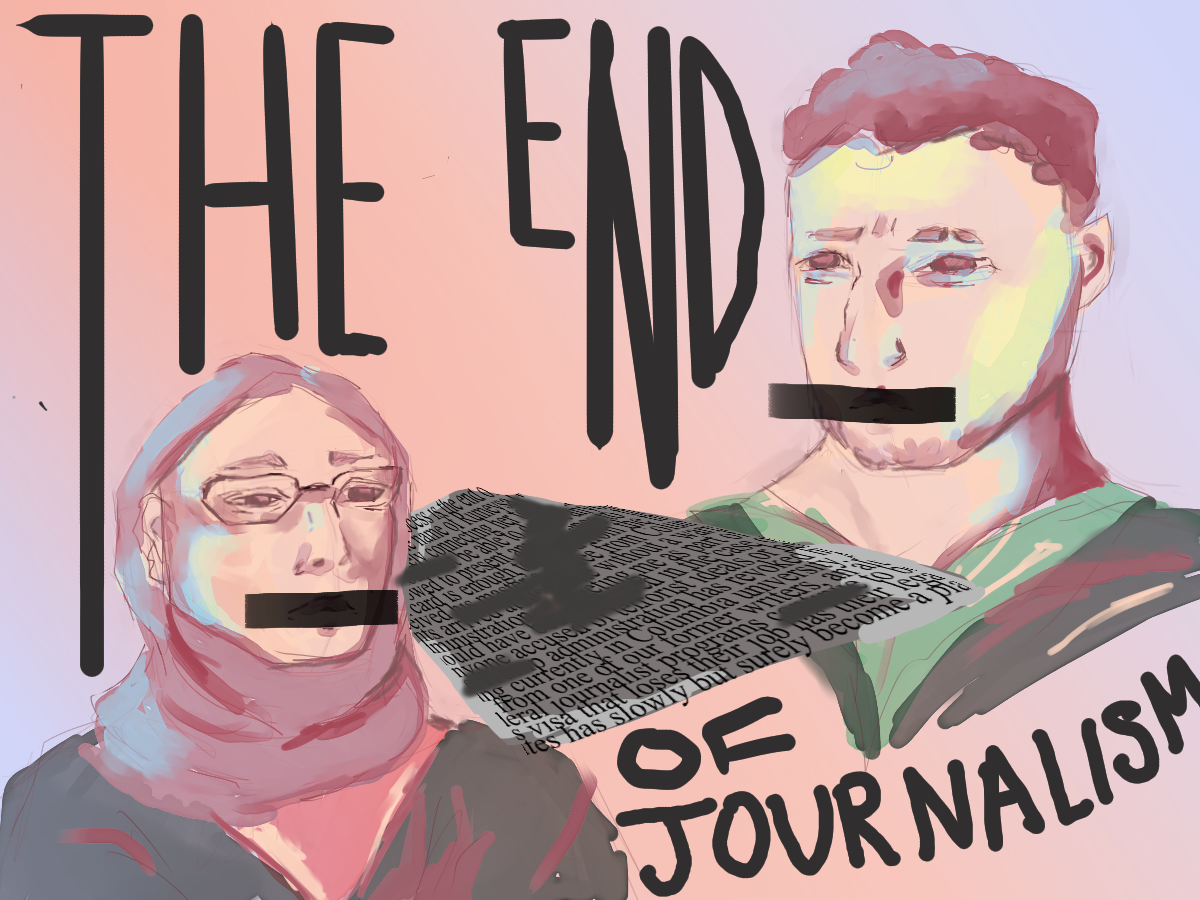

Since the introduction of Florida’s Benchmarks for Excellent Student Thinking writing exam, student essays are scored by a person only if their computer-generated grade has a low confidence score. Artificial intelligence, referred to as Automated Scoring when used to grade student essays, should not be used to score writing exams that are of major importance to students because of the process’s lack of transparency and the shortcomings of the AI model being used.

In a random sample conducted by the Florida Department of Education (FLDOE), fewer than half of the responses received low confidence scores. Therefore, the majority of writing exam results are not being overseen by a human grader.

Since 2014, Florida’s essay writing exams have been graded partly by a human and partly by a computer. Until 2022, however, each essay was scored by both a human grader and a computer, with the computer score used only to check the human score and, if necessary, refer the essay to another human grader. The computers were a failsafe against human error, and the computer-generated scores were not part of students’ final scores, which is how Automated Scoring should be used.

Before exams were graded solely by the Automated Scoring model, the AI was trained on essays scored by human graders. The FLDOE has described the number of human-graded student essays used to create the model only as “large,” without giving a specific figure. This information is important because the grading will likely be of poor quality if a small dataset was used. The FLDOE refuses to give concrete data to back up its Automated Scoring system.

This is no longer only a theoretical issue. On Texas’ 2024 writing exam, known as the State of Texas Assessments of Academic Readiness exam, human graders were almost entirely replaced by AI. The AI scoring model used by the Texas Education Agency was trained on only 3,000 responses. Texas had 5 million students enrolled in 2022, and the small size of the dataset left many teachers concerned about the credibility of the scoring.

The FLDOE has released no information regarding the dataset its model was trained on, and thus it is impossible for an outsider to determine whether Florida’s AI scoring model can adequately score students’ essays. The only other information given is that the department used “Florida student responses that were previously scored by at least two humans,” which is not enough to support the claim that the system is effective.

AI trained on human responses is also prone to learning human biases. This phenomenon has been seen in the underrepresentation of people of color in medicine due to AI, as well as in fewer women being hired because of AI algorithms. Both problems stem from the inadequate representation of these groups in datasets, which caused AI to develop a bias toward one group over another.

A similar effect can be seen in writing scores graded by AI. In a paper presented at the annual meeting of the National Council on Measurement in Education, researchers Mackenzie Young and Sue Lottridge found bias between limited English proficient (LEP) and non-LEP students and suggested that the unequal scoring could be due to differences in language patterns.

This matters especially in Florida, where one in 10 public school students was identified as an “English learner” in 2019. We cannot allow a system that discriminates against the writing of English learners to exist in a state full of them.

Additionally, rather than encouraging creative writing, the grading of state writing assessments by AI encourages students to write formulaically. A review of strategies for validating computer Automated Scoring says it causes “the over-reliance on surface features of responses, the insensitivity to the content of responses and to creativity, and the vulnerability to new types of cheating and test-taking strategies.” AI works entirely on pattern recognition and cannot measure reasoning, correct use of evidence, valid arguments or organization and clarity, all of which a student may include in an essay.

Schools have switched to Automated Scoring because of the high cost of paying human graders to score students’ essays by hand. For example, the Texas switch went hand in hand with a new test format that would have required more human scorers and therefore would have cost millions more. There was no clear information, however, about where the money saved by cutting corners would go or whether it would be invested in Texas schools at all.

The addition of AI to everything, from computers to search engines to education, is also driven by profit. For example, in California, school districts are signing more contracts for AI tools, including Ed for $6.2 million for two years, Magic School AI for $100 per teacher per year and QuillBot for $1,800 per school.

Florida will soon follow in California’s footsteps, with the state’s Legislature already having agreed to spend $2 million on grants for middle and high schools to increase the prevalence of AI in education. The AI will be used for tasks such as drawing up lesson plans, coaching students in writing in different languages and explaining the process of solving math equations.

Educators and students have little idea of the true effect of Automated Scoring on test scores because of the short time it has been in place. As with the push for standardized testing in 2001, Automated Scoring will lead teachers to show students how to write an essay that AI will score well instead of teaching them strong writing and creativity.

This can and will cause education to suffer as it did in the past. Therefore, Automated Scoring should not be used in exams that can determine a student’s class placement and should not be a determinant of a student’s writing proficiency. Instead, teachers should be at the forefront of education, with standards and testing that support their ability to teach students. Education officials need to be transparent with students about the technology they use.